In a business meeting the other day, a rep for a Bitcoin mining company said that there’s a rule that “Bitcoin always makes huge gains within 12 months of a halving” (a halving is a Bitcoin event, occurring roughly every four years, which cuts the reward for Bitcoin miners in half). I asked what the logic behind the rule was; he shrugged and said, “no logic, but it always happens.” After the first halving in 2012, BTC rose from $13 to a peak of $1,152 the following year, a return of about 8,760% (88X); the 2016 halving saw a return of “only” 26X ($664 to $17,760); and in 2020 it was about 6X ($9,734 to $67,549). The fourth halving took place on April 19th, 2024, with Bitcoin at around $65,000; in late October, when I started this article, it hovered around $68,000, an increase of a mere 5% (not even a measly 5X) and definitely not what the Bitcoin community was expecting (but there are still six months to go). The rep cheerfully admitted that there was no logic other than the historical relationship, while leaning heavily on that history, as if to say “who are you to say this isn’t a thing?” Of course, there have only been three (now four) observations, which is hardly statistically significant.
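
The multiples quoted above are gains (the rise from the halving price to the later peak, divided by the halving price) rather than raw price ratios. A minimal sketch using only the prices in the paragraph makes the arithmetic, and the shrinking trend, explicit; the names `halving_prices` and `gain_multiple` are just illustrative, and the 2024 "peak" is simply the late-October price, since that cycle wasn't finished.

```python
# Prices quoted in the text, in USD: (price at halving, peak within the following year).
halving_prices = {
    2012: (13, 1_152),
    2016: (664, 17_760),
    2020: (9_734, 67_549),
    2024: (65_000, 68_000),  # not a true peak: just the late-October 2024 price
}

def gain_multiple(start: float, end: float) -> float:
    """Gain as a multiple of the starting price (1.0 means +100%)."""
    return (end - start) / start

for year, (start, peak) in halving_prices.items():
    g = gain_multiple(start, peak)
    print(f"{year}: {g:.2f}X gain ({g:.0%})")
```

Printing both the multiple and the percentage shows how each cycle's gain is a fraction of the one before, which is exactly the sort of pattern a linear extrapolation of "it always happens" glosses over.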

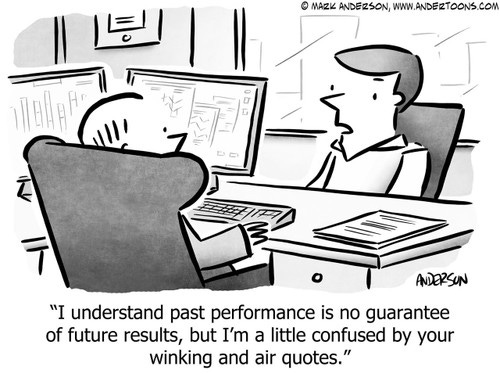

But the point of the story isn’t Bitcoin and its halving process [or the all-time high of over $90K it has made since Trump’s election!]; it’s linear thinking layered on empirical evidence. When we make investments, we are constantly reminded that “past performance is no guarantee of future results,” and yet our natural tendency is to do exactly what that warning cautions against: linearly extrapolate historical patterns into the future.

At the same time, we also tend to focus on the historical details which support the particular point we want to press home, and ignore the data which might muddy the waters. When I was a research analyst, we joked that our job was to “torture the data until it told the story we wanted to tell.” And the data often tells inconvenient stories. With the Bitcoin halving example, for instance, astute readers will notice a pronounced decline from each post-halving high to the next halving ($1,152 to $664 before the 2016 event, $17,760 to $9,734 before the 2020 event). The 2024 cycle ($67,549 to $65,000) stands in contrast: the usual price reset hasn’t happened, which implies ‘things might be different this time’ (or that the entire market is already fully aware of the halving “logic” and has discounted it in advance).
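
The contrast is easier to see as numbers. This sketch computes the decline from each cycle's peak to the price at the next halving, using only the figures quoted in the paragraph above; the `resets` and `drawdown` names are illustrative.

```python
# (previous cycle peak, price at the next halving), in USD, as quoted in the text.
resets = {
    "2012 cycle peak -> 2016 halving": (1_152, 664),
    "2016 cycle peak -> 2020 halving": (17_760, 9_734),
    "2020 cycle peak -> 2024 halving": (67_549, 65_000),
}

def drawdown(peak: float, later: float) -> float:
    """Fractional decline from a peak to a later price (0.25 means -25%)."""
    return (peak - later) / peak

for label, (peak, later) in resets.items():
    print(f"{label}: -{drawdown(peak, later):.0%}")
```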

Deterministic vs non-deterministic processes

When assessing future outcomes, it’s important to ask whether a process is deterministic or non-deterministic (a term I use to mean the opposite of deterministic, not the technical computer science term). I think our natural default is to treat most things as deterministic processes, like math or physics, where past outcomes are good indicators of future outcomes, rather than as non-deterministic processes, where future outcomes cannot necessarily be extrapolated from past observations. Sometimes people use ‘stochastic’ or ‘probabilistic’ as the opposite of deterministic, but I prefer non-deterministic because the other terms ascribe randomness to the process, which, in the case of financial markets, isn’t really appropriate. Fundamentally, markets incorporate mass psychology and game theory, which just happen to look random (which is why return distributions for most assets are similar to, yet statistically different from, normal distributions).
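
One common way to check how far a distribution departs from a normal one is excess kurtosis: a normal distribution has an excess kurtosis of zero, and fat tails (more extreme moves than a bell curve predicts) push it above zero. The sketch below is purely illustrative and uses simulated data, not real market returns: a Student-t distribution stands in for a fat-tailed "return" series, and the parameters (5 degrees of freedom, 200,000 draws, seed 42) are arbitrary assumptions.

```python
import random
import statistics

def excess_kurtosis(xs: list[float]) -> float:
    """Sample excess kurtosis: fourth central moment / variance^2, minus 3."""
    m = statistics.fmean(xs)
    var = statistics.fmean((x - m) ** 2 for x in xs)
    m4 = statistics.fmean((x - m) ** 4 for x in xs)
    return m4 / var**2 - 3

def student_t(rng: random.Random, df: int) -> float:
    """One Student-t draw, built as Z / sqrt(chi2(df) / df)."""
    z = rng.gauss(0.0, 1.0)
    chi2 = rng.gammavariate(df / 2, 2.0)  # chi-squared(df) is Gamma(shape=df/2, scale=2)
    return z / (chi2 / df) ** 0.5

rng = random.Random(42)
n = 200_000
normal_draws = [rng.gauss(0.0, 1.0) for _ in range(n)]
fat_tailed_draws = [student_t(rng, df=5) for _ in range(n)]  # simulated stand-in only

print(f"normal excess kurtosis:    {excess_kurtosis(normal_draws):.2f}")
print(f"Student-t excess kurtosis: {excess_kurtosis(fat_tailed_draws):.2f}")
```

Both series look like a bell-shaped cloud of numbers centred on zero, but the kurtosis figure separates them clearly, which is the sense in which asset returns can be "similar to, yet statistically different from" a normal distribution.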

For example, someone noticed a January effect in the 1940s: the US market tended to go up more than average every January, potentially because investors waited until the new calendar year to trade for tax reasons. But over time, the ‘January effect’ has disappeared, because anything systematic tends to be arbitraged away by traders front-running the potential excess return (which implies the January effect would eventually show up in December). Generally speaking, when people, psychology, human decisions, and game theory are involved, processes become non-deterministic. There are some physical processes, like race conditions in computer logic, where minute variations can create (undesirable) variable outputs, but overall, the physical world is largely deterministic.

This difference is important because it directly determines how well historical data can be connected to future observations. Even without knowing about the variability of the seasons, the time of sunrise on any given day is a reasonable approximation for sunrise the following day; but processes like traffic are non-deterministic because they have feedback loops (information about congestion on Google Maps, for instance), and individual optimization can lead people to travel at other times, changing the distribution of traffic in a non-deterministic way (even if the larger trend is predictable).

Single factor causation fallacies

It’s American football season, so I’ve been watching sports commentary: not known for its infallible logic, but perhaps a useful window into the logic of the ordinary Joe. Pushing their analytics jockeys to find supportive statistics for their favorite teams, commentators discover all sorts of arcana: aging NY Jets QB Aaron Rodgers has never lost on Halloween (he was 1-0 until this year, now he’s 2-0), and he has an above-average record on Thursday nights, without anyone ever discussing why that statistic makes any sense at all. Is Rodgers spookier on Halloween? Or is it just a random coincidence? More importantly, is a two-game trend the best statistic Jets fans can cite?

Most of the ‘logic’ is linear and pseudo-deterministic, even though teams of people are involved, which makes the processes definitely non-deterministic. One example: Lamar Jackson’s (quarterback, Baltimore Ravens, in the AFC) 23-3 win/loss record against NFC teams (23-1 after the first two losses) might be a function of unfamiliarity: he is such a unique player that opposing teams without much experience playing him might be at a distinct disadvantage. But this pattern goes back six years, and in football there are a great many other factors at play, allowing for upsets with regular frequency. To be fair, against AFC teams Jackson has a record of 46-24, so the much larger sample suggests a genuine anomaly, unlike the silly two-game Aaron Rodgers Halloween statistic.
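
A rough way to put numbers on this contrast is to ask how likely each record would be by chance alone. The sketch below treats every game as an independent coin flip with a fixed win probability, which is a strong simplifying assumption (it ignores opponents, injuries, home field, and everything else that makes football non-deterministic). It assumes a 50% chance per game for Rodgers, and uses Jackson's own 46-24 AFC win rate as the baseline for his NFC games; `prob_at_least` is an illustrative name.

```python
from math import comb

def prob_at_least(wins: int, games: int, p: float) -> float:
    """P(at least `wins` wins in `games` independent games, each won with probability p)."""
    return sum(
        comb(games, k) * p**k * (1 - p) ** (games - k)
        for k in range(wins, games + 1)
    )

# Rodgers on Halloween: 2 wins in 2 games, assuming a coin-flip chance each game.
rodgers = prob_at_least(2, 2, 0.5)

# Jackson vs NFC teams: 23 wins in 26 games, with his AFC win rate as the baseline.
afc_rate = 46 / (46 + 24)
jackson = prob_at_least(23, 26, afc_rate)

print(f"Rodgers 2-0 by chance: {rodgers:.0%}")
print(f"Jackson 23-3 or better by chance: {jackson:.2%}")
```

Under these assumptions a 2-0 Halloween record turns up a quarter of the time through luck alone, while 23-3 against a baseline of roughly 66% has less than a 1% chance, which is the gap that a p-value is meant to capture.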

In both sports and finance, we apply the language of statistics (with its t-stats and p-values, more appropriate for deterministic processes) to non-deterministic processes for two reasons: a) many non-deterministic processes exhibit deterministic characteristics, and b) no one has invented a separate language for non-deterministic statistics.

I guess the point here is that sports commentary often implicitly attributes the statistic being presented to a single factor, while implying a deterministic outcome, when a wide range of other factors are at work. I wish they would spend more time discussing the logic behind the statistic, rather than the trivial statistic itself.

I’m probably being unnecessarily analytical; perhaps the beer-swigging, nacho-munching audience isn’t concerned with the logic and just wants to hear arguments for or against their favorite teams (and to take pleasure when the pundits are dead wrong). That said, now that betting is such an important part of sports, including American football, there is a real economic incentive for taking one side or the other.

The rise of betting markets

Logic, linear and otherwise, has become more applicable in a world where betting markets are everywhere and it appears possible to bet on nearly anything.

In the recent US presidential election, there was an enormous difference between what the polls were saying (a 50/50 coin toss) and what the betting markets were saying (overwhelmingly Trump), so if pollsters and their followers thought their data was highly valid, they would have been incentivized to bet on Harris, where a 35 cent bet would have paid off $1, compared to a Trump bet, where the same $1 payoff would have required a 65 cent bet. A last-minute Iowa poll, apparently from a historically trustworthy pollster, showed a high likelihood of a Harris victory in the red state of Iowa, which was so unexpected that it temporarily moved the betting markets (most of them) to near parity, before they reverted to the previous 65:35 levels. Ultimately, Trump won Iowa and the swing states convincingly: the poll was completely misleading, and the betting markets were proven correct about the presidential winner. In what seems like an extreme rationalization, the pollster claimed her poll stimulated Republicans to get out and vote to prove her wrong (a non-deterministic outcome based on poll feedback!), which would imply that 200,000 Iowans (about 10% of all eligible voters in Iowa) who didn’t plan to vote for Trump got in their American-made pickups and went to the polls rather than watching passively at the local bar (or that Democrats who planned to vote skipped the polls). I favored the betting markets over the polls in the presidential election because of a linear factor: national polls had overestimated Democratic odds in the last three presidential elections in a row, probably because of some methodological bias, and this skew seemed likely to manifest itself again.
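
The incentive described above is simple expected-value arithmetic. This sketch assumes an idealized prediction-market contract that costs its price in dollars and pays exactly $1 if the outcome happens, and it ignores fees and the time value of money; the 35/65 prices are the ones quoted in the paragraph, and the function names are illustrative.

```python
def implied_probability(price: float, payout: float = 1.0) -> float:
    """A contract costing `price` that pays `payout` on a win implies odds of price/payout."""
    return price / payout

def expected_profit(price: float, your_probability: float, payout: float = 1.0) -> float:
    """Expected profit per contract if your own probability estimate is correct."""
    return your_probability * payout - price

harris_price, trump_price = 0.35, 0.65

# What the market was "saying" about each candidate.
print(f"Market-implied Harris chance: {implied_probability(harris_price):.0%}")
print(f"Market-implied Trump chance:  {implied_probability(trump_price):.0%}")

# Someone who truly believed the polls' 50/50 race would expect a profit on Harris...
print(f"Harris contract EV at 50/50 belief: {expected_profit(harris_price, 0.50):+.2f}")
# ...and an equal expected loss on Trump at the same belief.
print(f"Trump contract EV at 50/50 belief:  {expected_profit(trump_price, 0.50):+.2f}")
```

In other words, anyone who really trusted the polls was being offered roughly 15 cents of expected profit on every 35 cents staked.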

Betting markets were not right about everything: they had Harris as a strong favorite for the popular vote, a far less important outcome, which she lost.

Psychologically, it must be noted that the idea of a close race is conducive to voter turnout, the same way a likely blowout is conducive to voter apathy, so there is an argument that polls might want to show a race as closer than it really is, to get more people to vote and thereby provide a more accurate picture of the preferences of the population. But polls do not explicitly aim for this outcome, and if, over time, betting markets are seen as superior predictors of outcomes, this will naturally reduce the weight given to polls.

Financial markets are not mere betting markets

To most people, financial markets look quite similar to betting markets, and while there are some similarities, there are a variety of important differences.

Perhaps the most important one is the ‘odds’: betting markets are, by definition, at best zero sum games (and mostly negative sum games after the institution running the ‘game’ takes its cut). Financial markets also have institutions which take a cut, but they can still be positive sum ‘games’, i.e. it is possible (but obviously not guaranteed) for the preponderance of participants to profit. Most stock markets rise over time (especially in the US; yes, that is also selection bias). When a stock market rises, the majority of investors find themselves better off, even if some (short sellers, for instance) do not. I would argue that’s the best argument against gambling: if you want to take risk, it’s far better to do it in a place, like the US equity market, where positive sum outcomes are relatively common, rather than in places where positive sum games are unknown, like casinos or online betting marketplaces.
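
To see why a betting market is negative sum for its participants, take the common US sportsbook convention of "-110" odds on a point spread (stake $110 to win $100) as an assumed example; the numbers are illustrative and not taken from any particular venue. If one bettor backs each side of an evenly matched game, the book collects both stakes but pays out less than it took in.

```python
def book_margin(stake: float, win_amount: float) -> float:
    """House take as a fraction of the money wagered when one bettor backs each side.

    Each side stakes `stake` to win `win_amount`; the winner gets stake + win_amount back.
    """
    total_staked = 2 * stake
    paid_out = stake + win_amount  # only the winning side is paid
    return (total_staked - paid_out) / total_staked

def bettor_expected_value(stake: float, win_amount: float, p_win: float) -> float:
    """Expected profit per bet for a bettor who wins with probability p_win."""
    return p_win * win_amount - (1 - p_win) * stake

print(f"House keeps {book_margin(110, 100):.2%} of the money wagered")
print(f"Bettor EV on a 50/50 game: {bettor_expected_value(110, 100, 0.5):+.2f} per $110 bet")
```

With no house cut (stake $100 to win $100) both functions return zero, which is the "zero sum at best" case; any cut pushes the participants as a group below zero.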

The second major difference is the end-state: betting markets need finite outcomes in order to determine how to pay off the bet. Many financial markets (listed equities, currencies, most ETFs/funds) have no finite end-state; the position continues to fluctuate for as long as it is held (which induces all sorts of weird psychological effects). Yes, some of you might point out that some positions, if they involve margin leverage, derivatives, or structured products, can be wiped out, and might be closer to zero or negative sum games.

As I mentioned, nearly all financial disclaimers include the phrase “past performance is no guarantee of future results,” which everyone intuitively understands; and yet when most of us make decisions about markets, fund managers, or the assets themselves, we generally assume that what has been successful will continue to be successful (Warren Buffett, for example) and what has not been working will keep not working: classic linear deterministic thinking. Yet in the history of financial markets, it tends to be the ones who nail the turning points who garner the glory (perhaps because they are relatively rare): the people who ‘called’ the 2008 Global Financial Crisis, or George Soros when he ‘broke the Bank of England’ in 1992 by betting on the devaluation of the British pound, rather than the millions of investors who simply held a basic S&P 500 or NASDAQ index fund for decades and saw their fortunes multiply (but also saw their portfolios marked down during the periodic downturns).

One of my favorite movies, The Family Man, has a little-known scene which I always thought embodied logical conflict: at 1:00, Jack’s friends talk about how a new player is going to propel the New Jersey (now Brooklyn) Nets to the championship. Jack counters:

“Are you kidding? The Nets suck… (uncomfortable silence, around a group of Nets fans)… but they’re due.”

Jack is exercising unbiased linear thinking (because the Nets have sucked forever, they will always continue to suck), but his Nets fan compadres are perpetually optimistic (socially, switching teams based on performance is disloyal), so he salvages the situation with the only logical reversal he can muster (a mean reversion argument). I find this scene intriguing, because in real life, people rarely say “X sucks… but they’re due” unless there is another dimension (a relationship, or other connection) which suspends their usual linear thinking. Sports team affiliations, or relatives or friends who can’t seem to break bad habits, for instance, are examples of non-logical factors which often counteract the usual linear thinking.

There is, of course, the rare (and often lonely) person who actually looks for turning points, who is willing to go against the linear trend of history. I say lonely because linear thinking is usually correct, in little increments, until it is spectacularly wrong (“real estate markets always go up”, for instance).

I love selection bias, because it’s predictable

Selection bias is a very human attribute: we are naturally predisposed toward our own opinions, affiliations, and experience. In the first two cases, we often work backwards from the “correct” position to find analytics supporting our ex-ante ‘objective’ conclusion, while diminishing or ignoring the arguments against it; in the third, we naturally assume that our pool of experience, like the Iowa pollster’s, is large enough to be statistically significant, when in fact it rarely is.

My favorite story about the battle against selection bias is about Napoleon Bonaparte, who carried an extensive portable library with him into battle (the equivalent of the Wikipedia of his day), so that when he fought an engagement in a certain place, he would have the benefit of knowing what had happened in previous encounters in the same place. With an eye to avoiding the mistakes of the losers and gaining the advantages of the victors, he used his traveling library to skew the odds in his favor, compared to the opposing generals who could only draw upon what was in their heads at the time (selection bias). Sadly, there were not many historical battles fought in Russian winters for him to learn from during his disastrous campaign of 1812 (lack of data can be another form of selection bias).

Fat doesn’t make you fat

I won’t go into this too deeply, but after many decades of linear brainwashing, I think it’s finally understood that eating fat doesn’t directly translate into getting fatter; overconsumption of any calories (including fat, but especially highly palatable carbohydrates) makes people fat. “Eating fat makes you fat” isn’t even linear thinking; it’s more like an identity (the simplest linear relationship), but it’s also wrong, or at least not that simple, and it definitely helped the corporate proponents of processed carbohydrates push people toward non-fat or low-fat lifestyles, which indirectly made people, especially Americans, even fatter.

Trying to make sense of our world

The world is a complicated place. To the best of our abilities, we’re trying to make sense of it without taxing our brains too heavily, so we look for heuristics like linear logic which provide us with useful estimations (which are often socially acceptable as well). The difficulty with more complicated but potentially more accurate heuristics is that they take longer to calculate (and time to solution is often an essential parameter), and they often aren’t demonstrably more effective or correct, so why exert the extra brain power?

I think people dismiss ‘gut feel’ and intuition more often than they should, mostly because the ‘logic’ is not readily explainable. Now that AI has burst onto the scene, however, we can explain our intuition by saying “I’m using my highly trained neural net” in a way that, before AI, would not have been understood. This works much better, of course, if you are the kind of person who, like an AI, assesses your intuitive predictions against real-world results, feeds both successes and mistakes back into your neural net, and reads extensively to augment your (limited) personal data set. And of course, for people whose intuition is often correct, linear logic would assume they will continue to be correct, until the pattern changes. But at least now we have some deeper logic (a well-trained neural net with an efficient feedback loop) rather than mere linear extrapolation.

Final notes

I have nothing against linear logic; it’s an understandable part of our lives. I do have some issues with the blanket use of this kind of logic, however, without some introspection into what might be driving that linearity. Non-deterministic processes, because of their feedback loops and dependence on human decisions, often produce surprising outcomes, so linear logic in this specific area should be subject to more rigorous analysis. As the hype around artificial intelligence continues to grow, the non-deterministic area is one where I think some HI (human intelligence) and intuition may have a marked advantage over AI for some time, because (current) AI algorithms are far more geared to deterministic processes than to ones with embedded non-deterministic elements. That also suggests that human endeavors should be focused on areas with deeply non-deterministic outcomes.